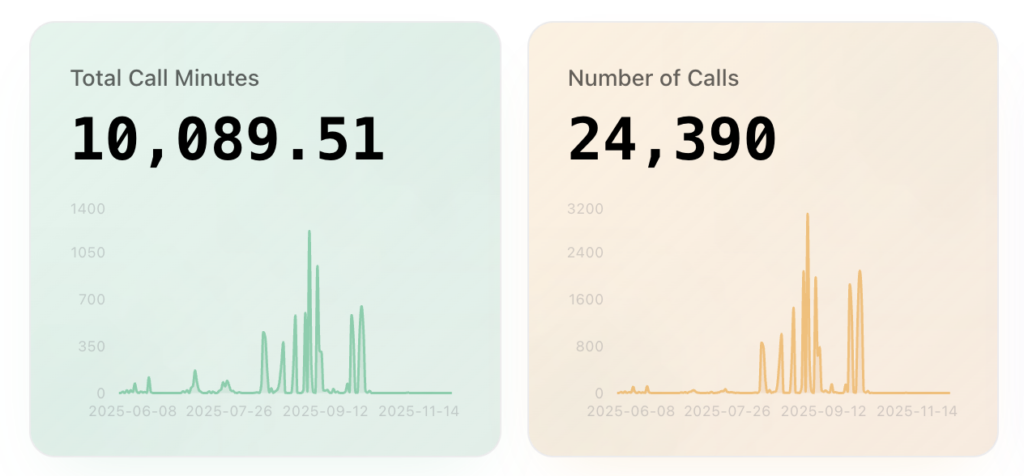

We built our own AI voice agent relay after 24,000+ sales calls

We didn’t get our breakthrough moment from writing a successful AI voice agent prompt. It came from listening to thousands of calls where the bot was stuttering, lagging, or stuck in dead air.

Those painful recordings became our data point that forced us to innovate and led us to build Dialshark from the ground up.

Back when AI voice agents were “Pretty good”

AI voice agents in 2023-24 started to look really promising.

You could spin up a demo fast and watch it glide through a mock conversation, and you’d think: Right, maybe this could actually replace and SDR now.

We used to use VAPI. And for smaller experiments it was genuinely impressive. If you needed a few hundred calls per day, a customer service bot or a pilot project, it was perfect.

We onboarded a few clients and started to push the volume on some campaigns with high agent concurrency.

And that’s when the cracks started to appear.

Outbound sales is brutal and unforgiving territory. Talking to real prospects isn’t like running evals.

They speak over you, talk fast, and are sometimes more interested in breaking your bot than the offer you are calling about!

In that environment you get no second chances. If the call quality dips even slightly, in lead generation, your CPA explodes.

When third-party voice agent infrastructure breaks

For Dialshark, this is where our story really began.

1. The concurrency ceiling

On paper the system could support high concurrency. In reality anything above a modest throughput produced some strange symptoms:

- words cutting off mid sentence

- 2-second gaps before agent response

- TTS restarting halfway through a phase

- STT misfires because audio distorted

When infrastructure fails, CPA goes up and trust goes down.

2. Poor call quality at scale

We didn’t just guess this was a problem, we heard it over and over again.

We reviewed thousands of recordings across campaigns. Many were salvageable. Many were good. But too many were unusable – calls where agents froze, or replied late, or continued to speak over the prospect because packets were arriving in the wrong order.

It wasn’t our script, and the product/offer were pretty good.

It was the plumbing between the dialler, STT, TTS, and LLM. A chain of third party hops that were never designed for sustained outbound concurrency at the scale that we needed to push it to.

And here’s the harsh lesson:

Outbound calling exposes latency in a way that a demo never does.

In a demo you got one call, perfect wifi, a warm cache, and no system load. In production you got 80 live calls, jitter, background noise, carrier variation, and real human quirks involved.

The gap between all of these components is where client ROI goes to die.

3. The reliability blind spot

So one of the biggest misconceptions in the early AI agent wave was this:

Better LLM = Better agent.

We learned it was the opposite to this – If your system prompt is well designed, the LLM is rarely what fails.

In contrast what we found was that failures were far more likely to be cause by timing, packet order, and other issues with the relay.

Our worst calls weren’t bad because they were poorly scripted. They were bad because they were laggy calls. The prospect would say “Hello?” and the agent would hear it a full second later. By the time it answered the prospect had hung up.

The model was mostly reliable but the infrastructure most certainly our biggest point of failure by a long shot.

Why our pilot voice agent was unsustainable at scale

We built Dialshark not because VAPI support was limited, or because we wanted to reinvent the wheel. The issue wasn’t support, more a case of vendor responsiveness.

It was fundamental: reliability and cost made third‑party infrastructure economically unworkable at the volume needed to guarantee client success.

Our client’s chance of lead-gen success depends mainly on one thing:

CPA (Cost per acquisition)

If your infrastructure has high latency at scale, CPA will rise.

If call quality drops, CPA rises.

If dropped calls increase, CPA rises

We started to realise that we had a huge problem. No clever prompting would fix what was effectively a quality of service problem.

We had a hard decision to make:

We either stay stuck at small scale forever, or we build our own relay.

The choice pretty much made itself.

The decision to build a custom voice agent relay

The final straw was when we were running an appointment setting campaign for a client in renewable energy.

After reviewing tonnes of broken calls, we found things like:

- the prospect says “hello?”

- silence

- silence

- then the TTS reads the wrong sentence

- then the conversation freezes up

- and the prospects bounce!

If you’re running a hobby project, these are novel bugs to have. But when you are running an outbound operation tied to real ROI, these things are deal breakers.

We projected the numbers, looked at concurrency forecasts, we heard what our clients were saying.

It became obvious we had to own the relay, the audio pipeline, timing loop and failure modes.

Now there would be no more waiting 3+ days for VAPI to reply to my support query. It was time for us to say a fond farewell – the only path to reliability was through ownership.

The relay layer: The bit no-one really talks about

When people think of AI voice, they picture:

- the agent’s tone

- how fast it answers

- now “human” it feels

But what actually determines success is something far less glam.

The relay stack

The part that moves audio, text, and timing between components.

Here’s a simple mental model:

Prospect Speech

↓

Carrier Audio

↓

[ RELAY LAYER ]

↓

STT → LLM → TTS

↓

Agent Speech

In a lot of third-party stacks, the relay layer is invisible because it’s distributed across different vendors. There isn’t a single arbiter of timing or quality.

Each hop adds latency and consequently a single point of failure.

Owning the relay however, means you have better control of:

- timing

- jitter tolerance

- packet flow

- backpressure

- buffering

- interruption handling

- stream priority

These are the areas that we invested heavily in, and as a result it’s where our biggest transformation took place.

What 24,000+ outbound voice agent calls taught us

Once we had rebuilt our core infrastructure the main patterns became obvious to us.

1. Reliability is the true category gap

Everyone is marketing realism. Nobody was marketing uptime, jitter control, or concurrency stability.

Yet these were the only things that would determine whether or not a campaign succeeded.

2. High-volume outbound breaks weak assumptions

Anything that works at 5 calls will break at 50 calls. That what works at 50 calls will jitter at 500. These weird behaviours are where ROI is truly lost.

3. Latency is the silent killer

Bad tone? Prospects can miss it. Even a slightly robotic voice – The prospect still listens. But a bot that repeatedly skips and takes 2 seconds between conversation turns.. The prospect just hangs up.

Human tolerance for delay in phone conversations is microscopic.

4. Cost matters more at scale

Buying SST+TTS+LLM+telephony à la carte is fine at 100 calls. But at 10,000 calls it gets expensive.

The bottom line was our margins were getting eaten by provider markups we couldn’t control. Margins are what dictate whether clients keep running campaigns with us.

5. CPA is the ‘North Star’

At the end of the day, nobody buys an AI sales agent because it sounds clever. They buy it because it lowers the cost of putting qualified opportunities in the pipeline.

CPA is king because it compresses everything that matters into one number: connect rate, call quality, handling time, conversion, and how many leads you quietly burn along the way.

The difference between Dialshark relay and VAPI

Before, we had latency accumulating at every step.

Call Carrier

↓

Third‑Party Audio Stream

↓

STT Vendor

↓

LLM Vendor

↓

TTS Vendor

↓

Back Through Each Hop

↓

Prospect

After, everything was more predictable, observable, and fail‑safe.

Call Carrier

↓

Dialshark Relay (Own Streams, Timing, Buffering)

↓

Internal STT + LLM + TTS Pipeline

↓

Prospect

The reliability checklist we use now

Here is the checklist we use to reach our goal of providing a stellar quality of service to clients:

[ ] Call connects under 200 ms

[ ] Round‑trip audio stays < 700 ms under 50 concurrent calls

[ ] Jitter < 3%

[ ] Packet loss gracefully handled

[ ] Barge‑in processed within 200 ms

[ ] No cumulative buffering across call duration

[ ] Carrier → Relay → STT pipeline logs fully traceable

[ ] Auto-fallback STT/LLM/TTS pathways available

[ ] Cost per min remains predictable

[ ] CPA tracked per campaign, not per call

This is how we keep campaigns stable even at industrial-scale volume.

The real reason we built Dialshark

We built Dialshark because our clients deserve predictable CPA, and no amount of clever prompting or conversation design can compensate for broken timing.

When infrastructure is flaky, you pay for it twice: once in wasted dials and again in a rising CPA as prospects churn after a bad first impression.

Reliability isn’t a feature, its the business model.

Apply to Join the Dialshark waitlist