AI voice agent performance: Lessons hidden in the call patterns

We thought that the key to a successful AI voice agent was to simply measure its booking rate. It turns out, the real story lived inside the call patterns.

Timestamps, latency, missed callbacks. This is where we found the true differentiators.

Why call-pattern analysis matters with voice agents

If you’re only tracking calls per day, you’re flying with a bug in your eye.

Most outbound teams already know their call volume. Amongst our clients, we typically see orgs making 50-100 connects per human agent per day.

What they don’t see is the behaviour under load.

- when agents slow down

- when callbacks slip

- when fatigue sets in

That’s what call-pattern analysis revealed – where an AI voice agent can outperform, or underperform, in ways your dashboard doesn’t show.

So we dug in and pulled 10,000 outbound call records, built graphs and plotted the data.

How we tracked our voice agent performance

We built our own instrumentation layer to log every heartbeat of an outbound call.

Metrics we watched:

- Dial tempo – calls per hour, per agent

- Speed-to-dial latency – time from end of one call to start of the next

- Connect-time windows – when calls were actually answered

- Missed-conversation patterns – voicemail vs successful pickup rates

- Callback latency – time until a scheduled callback was actually placed

- Disposition data – not just “meeting booked”, but “callback”, “no answer”, “DNC”

- Uplift vectors – meetings per 1k dials, contact rate, time-to-contact, follow-up success

Now every dial became a data point, and every silence became a valuable lesson.
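To make two of those metrics concrete, here is a minimal sketch of how speed-to-dial latency and callback latency could be derived from raw call logs. The `CallRecord` schema, its field names and the disposition labels are assumptions for illustration, not our production instrumentation.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class CallRecord:
    # Hypothetical log schema; field names are illustrative only.
    agent_id: str
    started_at: datetime
    ended_at: datetime
    disposition: str  # e.g. "meeting_booked", "callback", "no_answer", "voicemail", "dnc"
    callback_due_at: Optional[datetime] = None     # when the callback was scheduled for
    callback_placed_at: Optional[datetime] = None  # when it was actually dialled


def speed_to_dial_gaps(calls: list[CallRecord]) -> list[float]:
    """Seconds from the end of one call to the start of the next, per agent."""
    by_agent: dict[str, list[CallRecord]] = {}
    for call in calls:
        by_agent.setdefault(call.agent_id, []).append(call)

    gaps: list[float] = []
    for agent_calls in by_agent.values():
        ordered = sorted(agent_calls, key=lambda c: c.started_at)
        # Pair each call with the one that follows it for the same agent.
        for prev, nxt in zip(ordered, ordered[1:]):
            gaps.append((nxt.started_at - prev.ended_at).total_seconds())
    return gaps


def callback_delays(calls: list[CallRecord]) -> list[float]:
    """Seconds between a callback's scheduled time and when it was placed."""
    return [
        (c.callback_placed_at - c.callback_due_at).total_seconds()
        for c in calls
        if c.callback_due_at is not None and c.callback_placed_at is not None
    ]
```

Grouping by agent before computing gaps matters: mixing agents together would measure the gap between two different people's calls, which says nothing about pacing.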

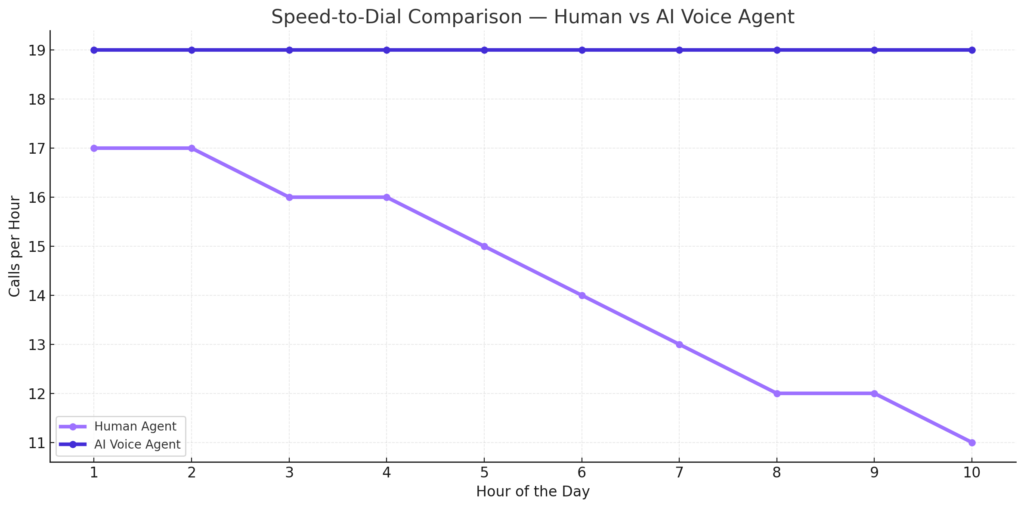

AI voice agents: The speed-to-dial race is on

When you have a large list of prospects to call, having your most valuable reps spending time dialling voicemails is not the most efficient way to run outbound.

We plotted our average latency between calls across a 10-hour day.

Human agent pattern:

- Start strong – 15-18 calls per hour in the AM.

- By 3pm it drifts towards 10-12 calls.

- It’s the classic fatigue slope.

AI voice agent pattern:

- Flat pace throughout the day.

- 18-20 calls per hour.

- No slope in performance.

Humans start strong, fuelled by coffee, at 15–18 calls/hour, then fade to 10–12 by mid-afternoon. The AI agent just stays chill and steady at 18–20 all day.
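If you want to spot the same fatigue slope in your own logs, a rough sketch is to bucket dial start times by clock hour and compare a morning window against an afternoon one. The window boundaries below (9 to 12 and 14 to 17) are assumptions; pick whatever matches your team's shift.

```python
from collections import Counter
from datetime import datetime


def calls_per_hour(start_times: list[datetime]) -> dict[int, int]:
    """Count dials started in each clock hour (0-23)."""
    counts = Counter(ts.hour for ts in start_times)
    return dict(sorted(counts.items()))


def tempo_drop(
    hourly: dict[int, int],
    morning: tuple[int, int] = (9, 12),     # assumed window: 09:00 up to 12:00
    afternoon: tuple[int, int] = (14, 17),  # assumed window: 14:00 up to 17:00
) -> float:
    """Fractional drop in average calls/hour from morning to afternoon."""

    def average(window: tuple[int, int]) -> float:
        values = [hourly.get(hour, 0) for hour in range(window[0], window[1])]
        return sum(values) / len(values)

    am, pm = average(morning), average(afternoon)
    # Guard against an empty morning so we never divide by zero.
    return (am - pm) / am if am else 0.0
```

A result near zero means a flat pace; a clearly positive value is the slope showing up in the numbers.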

What humans do best vs what AI voice agents should do more

| Task | Human Agent | AI Voice Agent |
|---|---|---|
| Emotional rapport, humour, tone-reading | Real empathy, can pivot mid-sentence | Limited, semi-rigid |
| Consistent dial pacing | Fatigue, distractions, breaks | Perfect pacing, 24/7 availability |
| Compliance nuance, objections, empathy | Adjusts based on trust and intent | Escalates fast, risk of robotic tone |
| Volume & coverage | Slows with load | Never tires, scales instantly |
| Data capture & call logging | Error-prone | Perfect recall, structured logs |

As it stands in voice AI for sales, humans win the conversation and the close, but AI wins on consistency and its ability to collect, analyse and store data like an absolute boss!

Knowing where each side wins stops you wasting effort in the wrong places when you’re building AI voice agents for outbound sales.

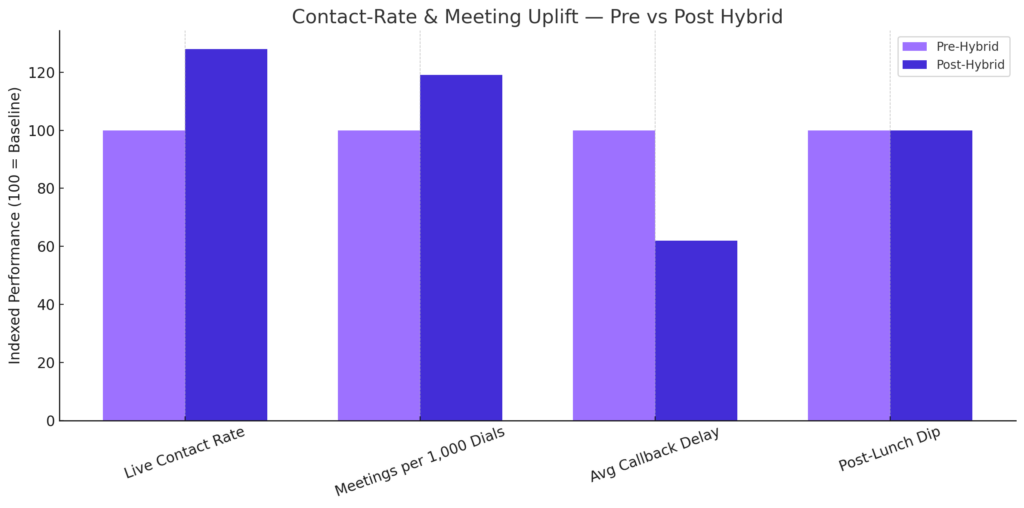

The hybrid model changes everything

Dialshark lets you decide whether your voice agent sets an appointment with your prospect or instantly escalates to a human via live call transfer.

After 2 weeks running a mixed queue (AI handling low-value dials, humans taking over live connects showing intent) our metrics showed:

- Live contact rate: ↑ 28%

- Meetings booked per 1,000 dials: ↑ 19%

- Average callback delay: ↓ 38%

- Post-lunch productivity dip: Flatlined

Where pure voice agent automation can fail

A lot of teams ask, “Why not just let the AI handle everything?”

Here’s why that usually breaks:

- Deep objections — AI struggles with nuance like “we’re under contract” or “call back in Q3”.

- Regulatory nuance — opt-outs, tone-sensitive sectors, legal disclaimers.

- High-trust industries — finance, healthcare, or any space where voice equals brand.

Many teams try to let AI voice agents handle everything, but that’s where it breaks down. AI is excellent at scale and speed, yet struggles with empathy, nuance, and compliance.

When objections or sensitive topics arise, it follows scripts instead of signals. The win isn’t full automation – it’s letting AI handle the grind while humans handle the moments that matter.

Operational lessons from analysing call patterns

- Fatigue is invisible without timestamps.

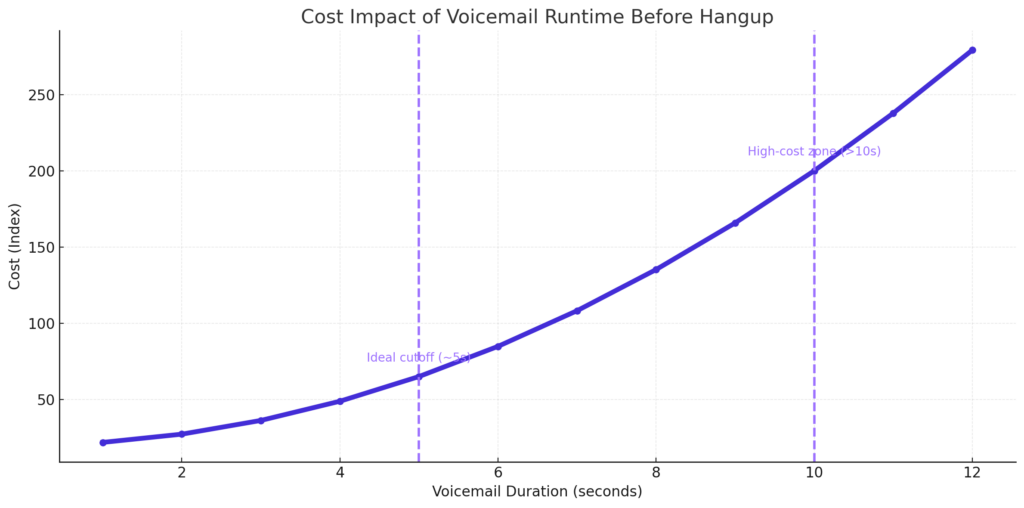

Hourly graphs show when humans really need a break, and where to insert AI coverage. - Voicemail detection matters.

Incorrectly dispostioning voicemails or keeping your agent on the call will cost you big. - Callbacks are gold.

Auto-scheduling callbacks gave us double digit uplift with zero script changes. - Speed trumps all.

The shorter the gap between calls, the more likely you get the prospect on the call. - Humans convert, AI scales and covers.

AI keeps the pipeline moving, and humans close the loop.

Common pitfalls (and how you can dodge them)

1. Mis-labelled dispositions

If your AI voice agent logs voicemails as “no answer”, you’ll spend days chasing ghosts. It’s one of the most expensive silent errors in outbound.

This is why your voice agent needs to pick up on subtle cues like tone, semantics, and voice activity detection (VAD) signals. Don’t rely on generic detection models, and make sure your agent can end calls early to avoid unnecessary spend.

2. Ignoring timezone bias

AI might not get tired but humans do – and so do your prospects.

We’ve seen teams proudly show off 24/7 dial charts without realising that half of their calls are landing at 8pm, getting flagged as spam, or hitting endless voicemail loops.

Timezones are one of the most overlooked optimisation layers. Map your target market’s activity windows, then run the dialler to prioritise live hours.

Once we did this, answer rates spiked, and our number health improved too.
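As a rough illustration of a live-hours check, the sketch below converts the current time into the prospect's local timezone before deciding whether to dial. The 9am to 5pm weekday window and the function name are assumptions; your market's real activity windows (and any local calling regulations) should drive the values.

```python
from datetime import datetime, time, timezone
from zoneinfo import ZoneInfo

# Assumed calling window in the prospect's local time; tune per market.
WINDOW_START = time(9, 0)
WINDOW_END = time(17, 0)


def in_live_hours(now_utc: datetime, prospect_tz: str) -> bool:
    """True if `now_utc` falls inside the calling window in the prospect's timezone."""
    if now_utc.tzinfo is None:
        raise ValueError("now_utc must be timezone-aware")
    local = now_utc.astimezone(ZoneInfo(prospect_tz))
    is_weekday = local.weekday() < 5  # Monday=0 ... Friday=4
    return is_weekday and WINDOW_START <= local.time() < WINDOW_END


if __name__ == "__main__":
    # Example: check a prospect stored with an IANA timezone name.
    now = datetime.now(timezone.utc)
    print(in_live_hours(now, "America/New_York"))
```

Storing an IANA timezone name per contact (rather than a fixed UTC offset) lets the standard library handle daylight-saving changes for you.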

3. Over-dialling the same list

Even the best AI pacing logic can’t save you if you’re hammering the same contacts too often.

Over-dialling is how you torch your caller ID reputation, exhaust good data, and start triggering spam flags that even new numbers inherit.

The trick is to add cooling periods to your workflow: 24-48 hours between retries. Build opt-out detection and ensure your voice agent stops calling the moment intent is negative.
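A cooling-period rule can be as small as the sketch below. The 24-hour value sits at the low end of the 24-48 hour range above, and the disposition labels in `STOP_DISPOSITIONS` are hypothetical; map them to whatever your own system records for opt-outs and negative intent.

```python
from datetime import datetime, timedelta
from typing import Optional

COOLING_PERIOD = timedelta(hours=24)  # low end of the 24-48 hour range
# Hypothetical labels for outcomes that must end all further dialling.
STOP_DISPOSITIONS = {"dnc", "opt_out", "not_interested"}


def may_redial(
    last_attempt_at: Optional[datetime],
    last_disposition: Optional[str],
    now: datetime,
) -> bool:
    """Decide whether a contact is eligible for another dial attempt."""
    # Negative intent or opt-out always wins, regardless of elapsed time.
    if last_disposition in STOP_DISPOSITIONS:
        return False
    # Never-dialled contacts are always eligible.
    if last_attempt_at is None:
        return True
    return now - last_attempt_at >= COOLING_PERIOD
```

Checking the stop list first is deliberate: a contact who opted out should never come back into the queue just because enough time has passed.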

Re-defining what AI voice agent “success” means

A lot of teams are out there chasing volume, bragging about dial counts and automations.

We define success very differently – and we lead every client through that same lens. For us, outbound isn’t a numbers game, it’s a unit economics game.

Every deployment is designed around one thing:

Client CPA – cost per qualified lead or booked meeting.

Because a $5k voice agent isn’t worth it if it just breaks. It has to make your pipeline cheaper, cleaner, and more predictable.

At Dialshark we measure cost per conversion – and then build the system to drive that down.
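For reference, the two ratios we keep coming back to are simple to compute. This is a minimal sketch; the function names are illustrative, and what counts as "total cost" (platform fees, telephony, human agent time) is an assumption you would need to define for your own deployment.

```python
def meetings_per_1k_dials(meetings: int, dials: int) -> float:
    """Booked meetings normalised to every 1,000 dials placed."""
    return 1000 * meetings / dials if dials else 0.0


def cost_per_meeting(total_cost: float, meetings: int) -> float:
    """Cost per booked meeting (the CPA); infinite if nothing was booked."""
    if meetings == 0:
        return float("inf")
    return total_cost / meetings
```

Tracking both side by side shows whether a jump in volume is actually making each booked meeting cheaper.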

We know a fully supported, data-led outbound framework is what makes sure every client hits the KPI we agree on before launch.